The need for speed - Part 1

Websites grow larger with each passing day. The average web page weighs in at a hefty 2.3 megabytes.

Large pages are detrimental for a number of reasons:

- They take longer to load. If it takes too long, users may abandon the page and go elsewhere.

- They cost more for users on a metered mobile data plan.

- Search engines may rank them lower in the search results.

Now that we’ve seen the consequences of a large page, we can look at ways to trim the fat.

But first, the obligatory ‘before’ picture:

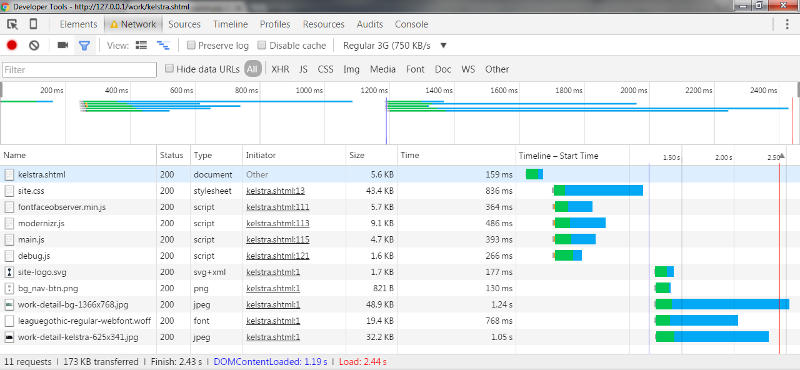

The Google Chrome browser ships with developer tools. These tools display all sorts of useful data, as can be seen in Figure 1.

Since this is an offline test, the network bandwidth has been throttled to Regular 3G. This simulates a typical 3G online connection.

The web page is 173KB in size, the HTML document is loaded and parsed after 1.19 seconds, and the page is fully loaded after 2.44 seconds.

The browser requests eleven files from the server. Each request adds some latency, as the request has to travel all the way from the client to the web server. The requested file then travels all the way back.

The number of requests could be reduced to eight by combining the four JavaScript files into one file. I shall expound upon that particular optimisation in a subsequent article.

The first seven files are good candidates for compression. This is because they are all text files, which typically contain a significant amount of repetitive data.

The last four files are poor candidates for compression. They are binary files which have already been compressed by the image / font manipulation programs that created them.

The rest of this article describes procedures that are specific to the Apache web server. Equivalent procedures for other web servers are beyond the scope of this article.

To enable compression on the web server, an htaccess file (named `.htaccess` on the server) is placed in the document root directory. The talented people over at HTML5 Boilerplate maintain such a file. I am a firm believer in not reinventing the wheel, and so I have adopted code from their file.

The complete htaccess file comprises code from multiple files. However, we start with an empty htaccess file and introduce code in stages. This allows us to test each snippet of code in isolation.

Figure 2 shows a few lines from the file compression.conf. The remaining lines have been omitted for brevity. The full listing may be viewed here.

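```apache
<IfModule mod_setenvif.c>
    <IfModule mod_headers.c>
        # Detect Accept-Encoding request headers that were mangled in transit
        # and restore them, so these clients still receive compressed responses.
        SetEnvIfNoCase ^(Accept-EncodXng|X-cept-Encoding|X{15}|~{15}|-{15})$ ^((gzip|deflate)\s*,?\s*)+|[X~-]{4,13}$ HAVE_Accept-Encoding
        RequestHeader append Accept-Encoding "gzip,deflate" env=HAVE_Accept-Encoding
    </IfModule>
</IfModule>
```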
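The lines shown above only repair mangled `Accept-Encoding` request headers. The directives that actually switch compression on are presumably among the omitted lines. As a rough idea of what such a directive looks like, here is a minimal sketch, assuming that `mod_deflate` and `mod_filter` are available on the server. The list of MIME types is an illustrative selection covering the text files discussed earlier. It is not a copy of the HTML5 Boilerplate list, which is considerably longer.

```apache
<IfModule mod_deflate.c>
    <IfModule mod_filter.c>
        # Illustrative selection only: compress text-based responses
        # (HTML, CSS, JavaScript, SVG) and leave already-compressed
        # binary formats such as images and fonts untouched.
        AddOutputFilterByType DEFLATE text/html text/css text/javascript application/javascript image/svg+xml
    </IfModule>
</IfModule>
```

Wrapping the directive in `<IfModule>` blocks means the server simply skips it, rather than returning an error, if either module is not loaded.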

The htaccess file, containing just the contents of compression.conf, may be downloaded here. Unzip htaccess.zip to extract.

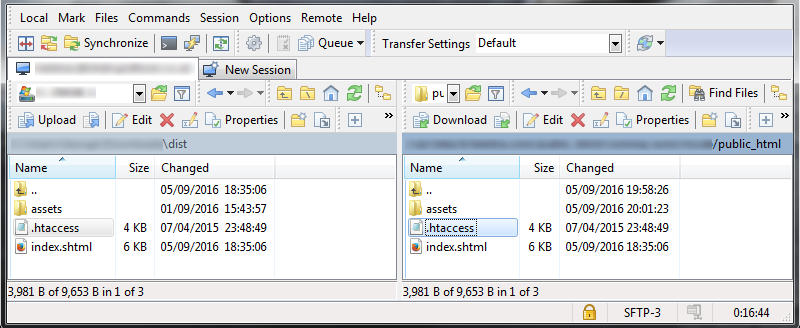

The htaccess file is then uploaded to the document root directory on the web server. This directory is usually named public_html. It may also be named www or httpdocs. Figure 3 shows the local copy of the file on the left, and the uploaded remote copy on the right.

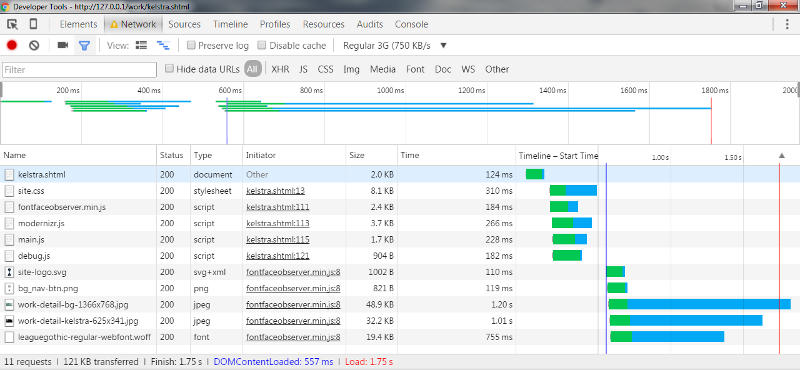

The test is run again. Let’s see what a difference enabling gzip compression can make.

The ‘after’ picture:

| | Uncompressed | Compressed | Reduction |
|---|---|---|---|
| kelstra.shtml | 5.6KB | 2.0KB | 64.29% |
| site.css | 43.4KB | 8.1KB | 81.34% |
| fontfaceobserver.min.js | 5.7KB | 2.4KB | 57.89% |
| modernizr.js | 9.1KB | 3.7KB | 59.34% |
| main.js | 4.7KB | 1.7KB | 63.83% |
| debug.js | 1.6KB | 0.88KB | 45.00% |
| site-logo.svg | 1.7KB | 0.98KB | 42.35% |
| Web page | 173KB | 121KB | 30.06% |

| | Uncompressed | Compressed | Reduction |
|---|---|---|---|
| Web page | 2.44s | 1.75s | 28.28% |

The web page is now 30.06% smaller, and loads in 28.28% less time. A significant improvement!
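The figures in the Reduction column are consistent with the usual relative-saving formula. As a worked check, using the whole-page size and load time from the tables:

$$
\text{reduction} = \frac{\text{uncompressed} - \text{compressed}}{\text{uncompressed}} \times 100\%
$$

$$
\frac{173 - 121}{173} \times 100\% \approx 30.06\%, \qquad \frac{2.44 - 1.75}{2.44} \times 100\% \approx 28.28\%
$$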